Introduction

I’ll admit to it: I am an absolute R addict. But I’m also very interested in the capabilities of artificial intelligence, for text as well as image creation. So, when an R wrapper for the OpenAI API was recently released (1), I had to start toying with it. In this blog post, I’ll briefly write down how you can use these tools to generate a bunch of inspirational paintings, which are automatically downloaded into your working directory and bound together into a nice-looking collection.

As a test case, we will repeatedly ask the models to imagine what the results could have looked like if Claude Monet had travelled the wilderness of today’s Joshua Tree National Park. We could of course ask anything of these models, but so far, I’m really quite happy with the paintings resulting from this specific scenario.

In order to run the R code shown below, you’ll have to load the following four packages.

    library(openai)
    library(png)
    library(RCurl)
    library(magrittr)

Set up your OpenAI API

Before you can try this yourself, you’ll have to set up an OpenAI account. The company charges for the usage of its models, but for sporadic usage it’s not very pricey. Using gpt-3.5-turbo (a faster version of ChatGPT) currently costs 0.002 USD per 1000 generated tokens¹, whereas images created by DALL-E 2 go for two cents each.

¹ In the context of large language models like GPT-3, a token refers to a unit of text that the model processes during both the training and inference stage. Tokens can be individual characters, words, or subwords, depending on the tokenization method used. In the case of OpenAI’s tokenization, one word in English equals about 1.33 tokens on average.
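To get a feeling for what a run might cost, here is a rough back-of-the-envelope sketch using the prices quoted above. The function name `estimateCost` and the prompt length of 50 words are my own assumptions for illustration, and the calculation only counts generated tokens, so treat the result as an order of magnitude rather than a bill.

```r
# Rough cost estimate for one run (prices as quoted in this post;
# check OpenAI's current pricing before relying on them).
estimateCost <- function(n_images,
                         words_per_prompt = 50,      # assumed prompt length
                         tokens_per_word = 1.33,     # average from the footnote
                         usd_per_1k_tokens = 0.002,  # gpt-3.5-turbo
                         usd_per_image = 0.02) {     # DALL-E 2
  tokens <- n_images * words_per_prompt * tokens_per_word
  chat_cost <- tokens / 1000 * usd_per_1k_tokens
  image_cost <- n_images * usd_per_image
  c(chat = chat_cost, images = image_cost, total = chat_cost + image_cost)
}

# Cost of generating 21 prompts and 21 images
estimateCost(21)
```

Under these assumptions, 21 images come to roughly 0.42 USD, and almost all of that is spent on image generation rather than on the text model.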

The wrapper for the OpenAI API is called openai and can be installed directly from CRAN (1). In order to use the API, you’ll have to provide an API key. For this, sign up for the OpenAI API and generate your personal secret API key in your account (click on Personal and select View API keys in the drop-down menu). You can then copy the key by clicking on the green text Copy.

The secret key is needed by every function that calls a model via the API. Instead of passing it each time, you can store it once in an environment variable.

    Sys.setenv(OPENAI_API_KEY = "<SECRET KEY>")

Make sure to replace <SECRET KEY> with your actual secret API key. Once this is done, you will be able to use OpenAI’s models directly from within R, and you won’t have to pass the API key to any functions from the openai package.
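Before firing off any requests, it can be handy to check that the key is really available in the current session. The following is a minimal sketch; `hasApiKey` is a hypothetical helper of my own and not part of the openai package.

```r
# Hypothetical helper (not part of the openai package): TRUE if the
# OPENAI_API_KEY environment variable holds a non-placeholder value.
hasApiKey <- function() {
  key <- Sys.getenv("OPENAI_API_KEY", unset = "")
  nzchar(key) && key != "<SECRET KEY>"
}

if (!hasApiKey()) {
  message("OPENAI_API_KEY is not set; API calls will fail.")
}
```

If you don’t want the key to appear in your scripts at all, one option is to put a line of the form OPENAI_API_KEY=... into your .Renviron file, which R reads at startup.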

Automatic prompting

In a first step, we want to make use of the large language model ChatGPT. How could such a model help us with images? Well, we could use it to elaborate on a very simple prompt. Hence, we will write a function in R which takes a very simple prompt and re-writes it in a much more elaborate way. This procedure adds some details and variation to a very basic prompt that might make the result more interesting. However, if you already know exactly what you want, this step is likely not necessary.

    getPrompt <- function(task){
      # (1) Build the detailed instruction around the brief task string
      instruction <- paste0("Give a dalle prompt that will generate ",
                            task,
                            ". Write maximally three sentences. ",
                            "Use text only, no special characters.")
      # (2) Ask gpt-3.5-turbo to write the prompt
      response <- openai::create_chat_completion(
        model = "gpt-3.5-turbo",
        messages = list(list(role = "user",
                             content = instruction))
      )
      # (3) Return only the generated prompt text
      return(response$choices$message.content)
    }

1. Make a detailed instruction. Note how most of this is fixed. The brief string called task will be inserted into the more detailed instruction.
2. Generate a prompt from the simple instruction using the model gpt-3.5-turbo (i.e. the faster version of ChatGPT).
3. Return only the response of ChatGPT without any additional information, i.e., the generated prompt.
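If you want to see exactly what is sent to ChatGPT before spending any tokens, you can build the instruction on its own. This is only a sketch: `buildInstruction` is a hypothetical helper that mirrors the instruction-building step of getPrompt and makes no API call.

```r
# Offline preview of the instruction that getPrompt() would send.
# buildInstruction() is a hypothetical helper, not part of any package.
buildInstruction <- function(task) {
  paste0("Give a dalle prompt that will generate ", task,
         ". Write maximally three sentences. ",
         "Use text only, no special characters.")
}

# Print the full instruction for our test case
cat(buildInstruction("Joshua Tree National Park scenery painted by Claude Monet"))
```

Tweaking the fixed parts of this string (for example the maximum number of sentences) is an easy way to steer how elaborate the generated prompts become.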

We can test this function with a very simple prompt. For example, we can ask ChatGPT to rephrase the very simple input "Joshua Tree National Park scenery painted by Claude Monet" such that it includes more detailed instructions.

    task <- "Joshua Tree National Park scenery painted by Claude Monet"
    prompt <- getPrompt(task)

Inspecting the character object prompt, we can see that it says the following:

"Create a painting inspired by Joshua Tree National Park in the style of Claude Monet's impressionism. Use a bright and colorful palette to capture the desert landscape and the play of light and shadow on the boulders and cacti."

This seems to work great! ChatGPT does add some elements to the prompt such as “boulders” and “cacti” that would not have been mentioned otherwise. Now, we can use this more elaborate text prompt as an input to DALL-E 2.

Generate a collection of paintings

DALL-E 2 is an artificial intelligence system that generates original images corresponding to an input text as caption (2). Researchers found that the model was able to create stunning images, but it seems to struggle much more with detailed instructions about the image content than with stylistic instructions (3). For our task, this does not matter that much, as the style is very much the focus.

First, we’ll create a function getImage which passes the previously created prompt to DALL-E 2 for the image generation. The model will save the generated image under a specific URL, which we will return with our function.

getImage <- function(prompt){

return(openai::create_image(prompt)$data$url)

}Hence, we can already create one single image, and subsequently download that image from the web directly into our working directory.

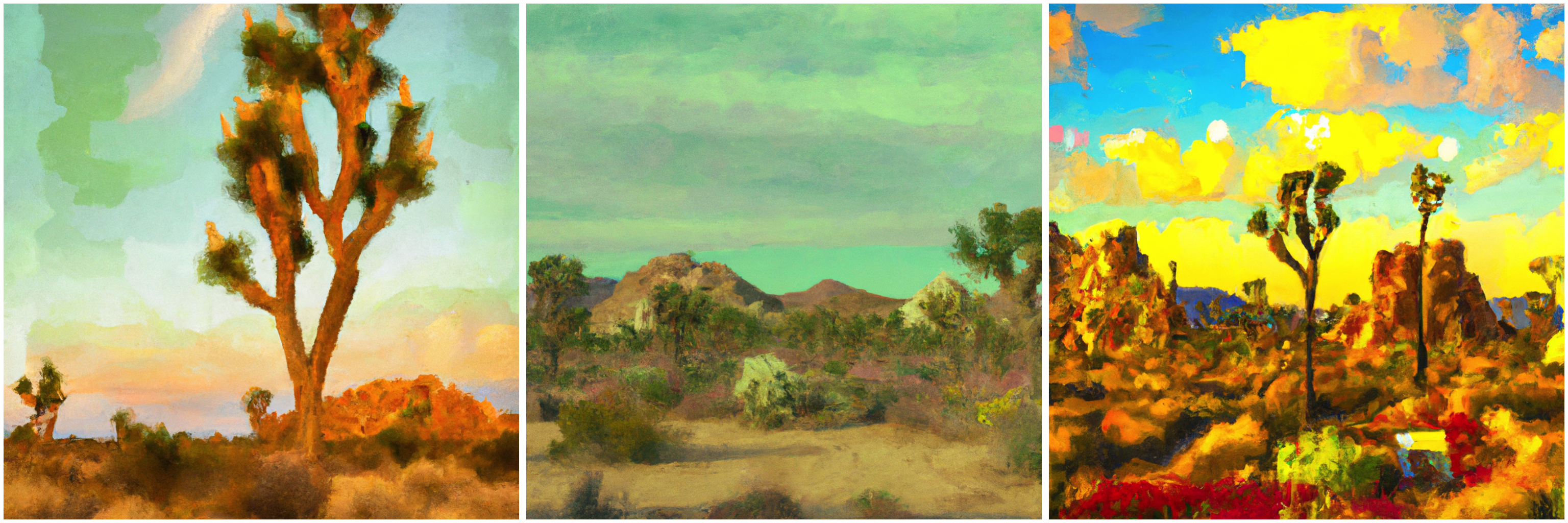

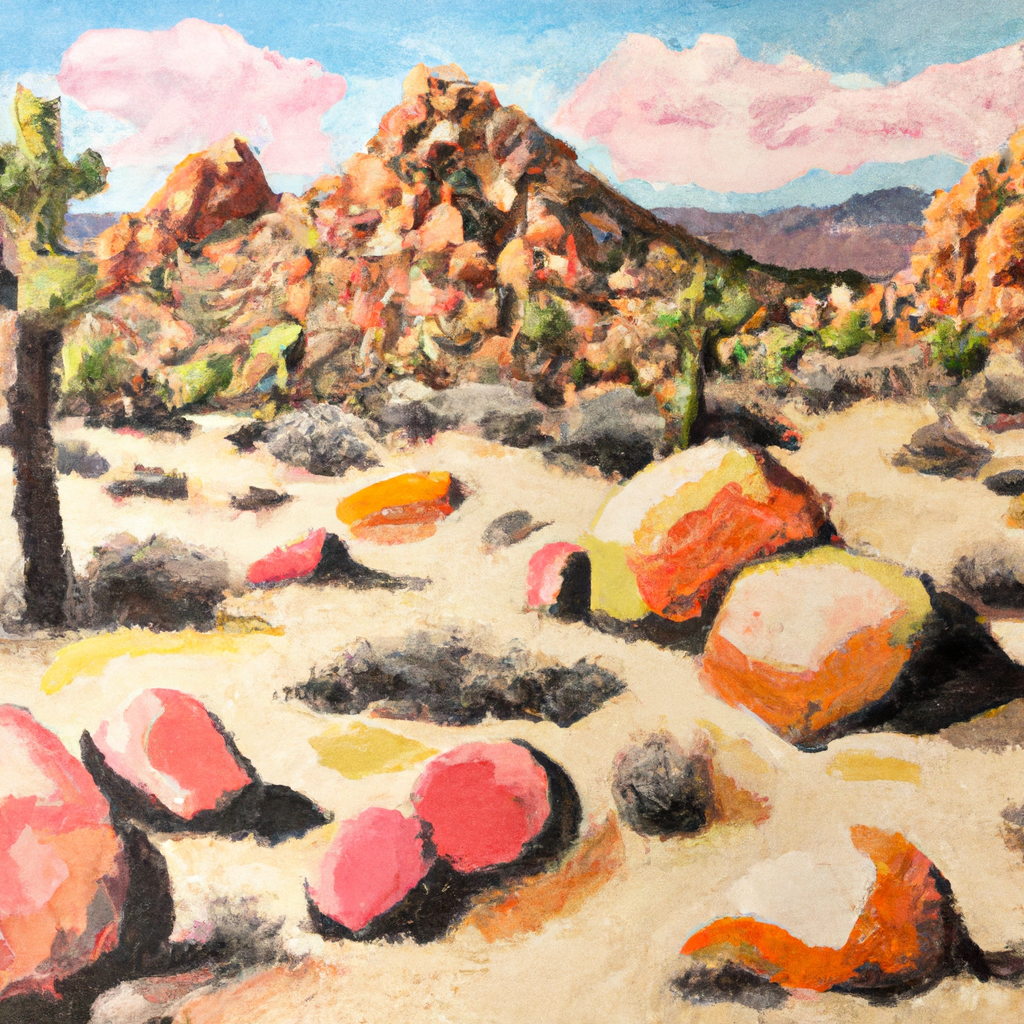

download.file(getImage(prompt), "Monet.png", mode = "wb")The downloaded image Monet.png could look something like this:  But keep in mind, since both the prompt and image generation are stochastic processes, your image might also look totally different.

But keep in mind, since both the prompt and image generation are stochastic processes, your image might also look totally different.

With the two functions getPrompt and getImage, we are good to go. Our goal is to repeatedly generate different prompts, and from these, generate different images. All the generated images should then be combined to a collection of paintings such that they are easily presentable.

To do this, we run both functions with the same task repeatedly in a simple for-loop, as demonstrated in the following code. It compiles the randomly generated images into one png-file, which is saved as Collection.png in your working directory. At the same time, the individual prompts and images are saved as well.

    # (1) Layout of the final compilation
    cols <- 7
    rows <- 3

    # (2) Open the png device: one inch per painting
    png("Collection.png", width = cols, height = rows, res = 600, units = "in")
    par(mfrow = c(rows, cols), mar = rep(0.1, 4), oma = rep(0.1, 4))

    for (i in 1:(rows * cols)) {
      # (3) Generate a detailed prompt and an image from it
      prompt <- getPrompt(task)
      URL <- getImage(prompt)
      # (4) Save the image and its prompt
      name <- paste0("Monet_", i)
      download.file(URL, paste0(name, ".png"), mode = "wb")
      writeLines(prompt, paste0(name, ".txt"))
      # (5) Read the image back in and plot it into the next cell
      #     (as.raster() for arrays lives in grDevices)
      paste0(name, ".png") %>%
        png::readPNG() %>%
        grDevices::as.raster() %>%
        plot()
    }

    dev.off()

1. Define the number of columns and rows that the final compilation will incorporate. The short program will subsequently prompt DALL-E 2 cols * rows times, here that is 21 times.
2. Open a new png-file. Set the resolution in pixels per inch however you like, but keep in mind that the downloaded DALL-E 2 paintings will have a size of 1024 × 1024 pixels.
3. Generate a detailed prompt using ChatGPT and subsequently an image with this detailed prompt using DALL-E 2.
4. Download both the generated image as well as the prompt used to generate the image into your working directory. This allows subsequent inspection of which prompts lead to the better-looking results and also retains the image in its original (full) resolution.
5. Load the image into R, convert it to a raster object and plot the image in the plotting plane. This step is necessary to generate the compilation of images.
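Since every pass through the loop costs a little money, it can be worth testing the layout first without calling the API. The following sketch does that with random colour tiles standing in for the paintings; the file name Layout_test.png, the small grid and the low resolution are arbitrary choices of mine.

```r
# Offline sketch of the compilation step: random colour tiles stand in
# for the downloaded paintings, so no API key is needed.
cols <- 3
rows <- 2
outfile <- file.path(tempdir(), "Layout_test.png")

png(outfile, width = cols, height = rows, res = 100, units = "in")
par(mfrow = c(rows, cols), mar = rep(0.1, 4), oma = rep(0.1, 4))
for (i in 1:(rows * cols)) {
  # A 4 x 4 x 3 array with values in [0, 1] mimics what png::readPNG() returns
  tile <- array(runif(4 * 4 * 3), dim = c(4, 4, 3))
  plot(grDevices::as.raster(tile))
}
dev.off()

# TRUE if the test compilation was written
file.exists(outfile)
```

Once the grid looks the way you want, the same png and par settings can be reused with the real images.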

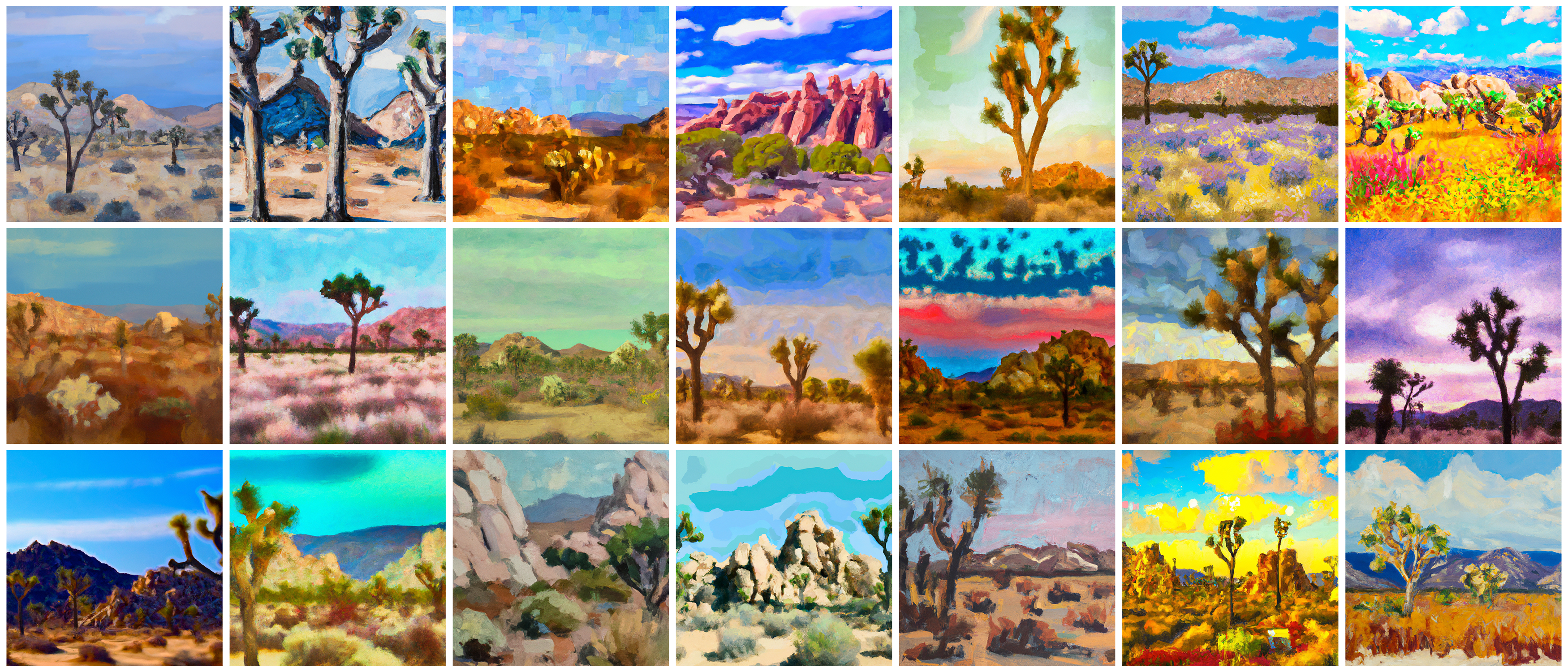

The execution of that code might take some time, as we have to wait for ChatGPT to generate a prompt, DALL-E 2 to generate the image from this prompt, and R to download that painting and plot it. The result might look somewhat like what you can see in figure 1.

We can now directly compare this result to an alternative, where I did not use ChatGPT to manipulate the prompt before submitting it to DALL-E 2. This is very easy to achieve: simply omit the function getPrompt from the code above and define the prompt yourself, here as "Joshua Tree National Park scenery painted by Claude Monet". In figure 2, we can see that the resulting images look stunning as well, but they do seem to have less variance in what they show, in the exact painting style they capture, and in the colours. Also, we seem to get a very similar perspective in all the generated images.

Since we saved the prompts together with the images, we can use them to study what effects certain expressions have on the outcome of the generated image. For example, the third painting in the first row was generated with a prompt including the words “bold brushstrokes and a bright palette”, which clearly affects the style. With the prompt variation, we can pick out images we like, read the prompt that was used to generate them and slightly change it for repeated usage.
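To make this kind of inspection a bit more systematic, the saved prompt files (Monet_1.txt, Monet_2.txt, and so on) can be collected and searched for a phrase. This is a minimal sketch; `findPrompts` is a hypothetical helper of my own, and it assumes the files sit in the working directory under the names used in the loop above.

```r
# Hypothetical helper: collect the saved prompt files into a data frame
# and flag those that contain a given phrase (case-insensitive).
findPrompts <- function(phrase, dir = ".", pattern = "^Monet_[0-9]+\\.txt$") {
  files <- list.files(dir, pattern = pattern, full.names = TRUE)
  prompts <- unname(vapply(
    files,
    function(f) paste(readLines(f, warn = FALSE), collapse = " "),
    character(1)
  ))
  data.frame(file = basename(files),
             prompt = prompts,
             match = grepl(tolower(phrase), tolower(prompts), fixed = TRUE),
             stringsAsFactors = FALSE)
}

# Which of the saved prompts mention bold brushstrokes?
hits <- findPrompts("bold brushstrokes")
hits$file[hits$match]
```

The matching file names point directly to the corresponding images, since each prompt shares its name with the painting it produced.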

Exploring alternative ideas

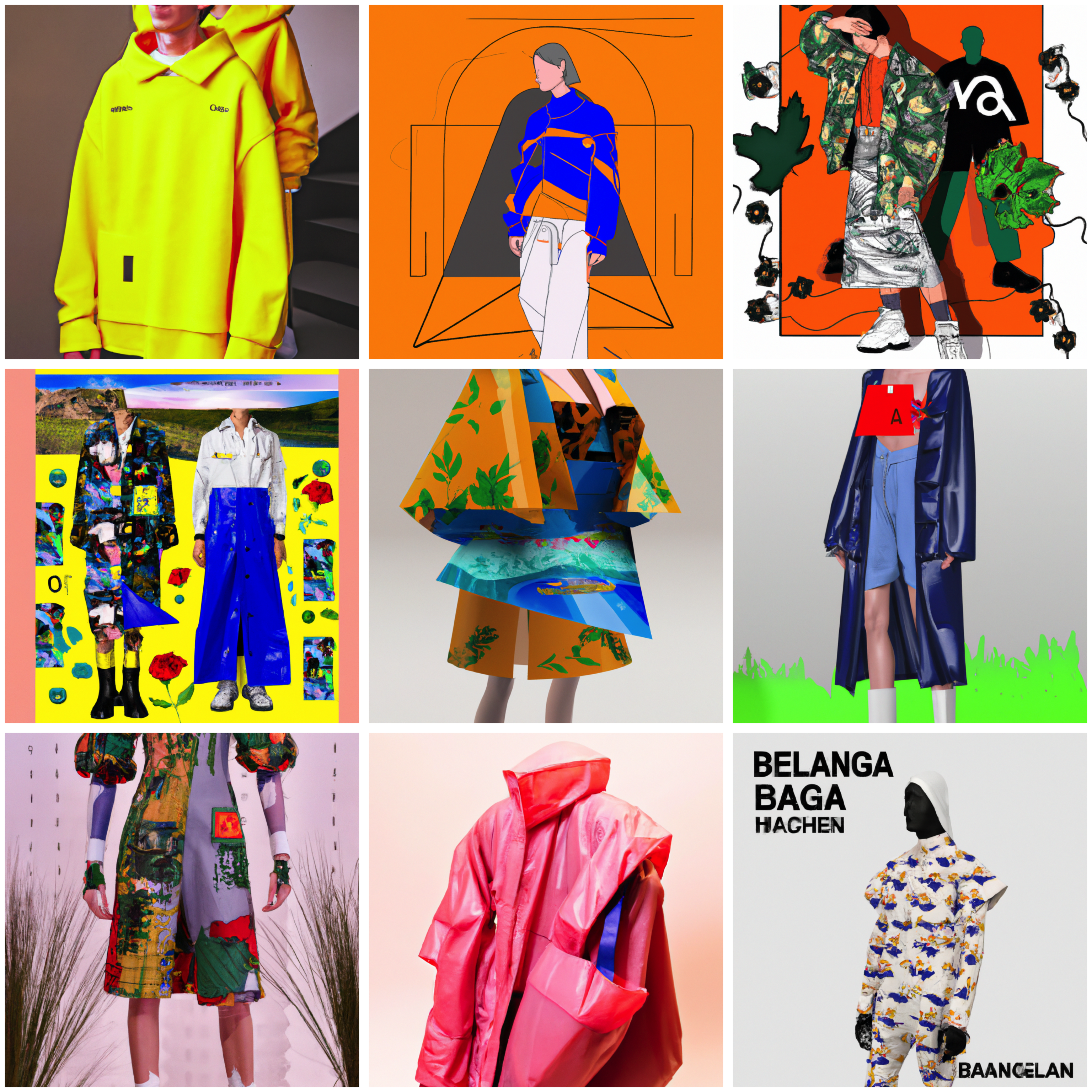

Up until now, our focus has been on envisioning Claude Monet traversing the arid landscape of Southern California. Nonetheless, we are not restricted to emulating the techniques of a single artist. Take, for instance, the possibility of seeking inspiration from the 2025 Balenciaga fashion show.

These Balenciaga designs look horrible, realistically mirroring the actual brand’s aesthetics! However, the clothing design ideas are much more colourful than one might be used to from Balenciaga. Who knows, maybe ChatGPT knows more about upcoming fashion trends than all of us?

Conclusion

Throughout this blog post, we’ve discovered that the OpenAI API can be effortlessly accessed through the R package openai, allowing us to play with state-of-the-art generative models from within R. We have also seen that integrating ChatGPT with DALL-E 2 can yield a more diverse range of outcomes. This may be particularly appealing when seeking numerous variations of a simple prompt, the results of which we can use as an inspiration for a creative project.

References

Citation

    @online{oswald2023,
      author = {Oswald, Damian},
      title = {What If {Monet} Visited {Joshua} {Tree} {National} {Park?}},
      date = {2023-05-05},
      url = {damianoswald.com/blog/monet-joshua-tree},
      langid = {en},
      abstract = {With the release of an API to many of OpenAI’s models,
        combined with a wrapper for R, we can run these models directly from
        within R. This can be useful in order to automate prompting for
        image generation. In this blog post, we will look at how something
        like this might be done in order to automatically generate specific
        images, so that we end up with a large selection of images to choose
        from for any artistic project.}
    }